Sep 25, 2025


We're thrilled to announce that Mercury Coder, Inception’s diffusion LLM (dLLM), now provides unmatched Apply-Edit capabilities, with speed and quality far exceeding those of the leading Apply-Edit models.

Since announcing Mercury Coder in February, we've been steadily expanding its capabilities to support developer workflows. In April, with the release of our API, we introduced fill-in-the-middle (FIM) support, enabling Mercury Coder to power state-of-the-art code auto-completion. And last month, in partnership with Continue, we launched support for Next-Edit, which proactively recommends code changes. Apply-Edit is the next step towards a comprehensive coding dLLM that can handle the full spectrum of development workflows, from initial code generation to iterative refinement and debugging.

What is Apply-Edit?

Frontier LLMs excel at generating and editing complex code. In practice, however, using these edits requires integrating the generated code with existing files and code bases. That is, it requires applying edits.

Most coding models are poorly suited for the Apply-Edit task. Rather than re-writing large files, models like Claude and GPT tend to output comments like `# ... (rest of file unchanged)` surrounding the edited code. This makes sense in the context of online chat interfaces, given that reproducing large files is slow and expensive. But this behavior breaks coding agents. Moreover, using massive frontier models for a task that largely involves copying code is hugely inefficient.
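To make the failure mode concrete, here is a minimal sketch of how an agent might detect these elision placeholders before writing generated code to disk. The pattern and the `find_elisions` helper are illustrative assumptions, not part of any Inception tooling, and the heuristic is deliberately simple.

```python
import re

# Heuristic (assumed, not exhaustive): a comment line whose text starts with "...",
# such as "# ... (rest of file unchanged)" or "// ... existing code ...".
ELISION_PATTERN = re.compile(r"^[ \t]*(#|//|/\*|<!--)[ \t]*\.\.\..*$", re.MULTILINE)


def find_elisions(generated: str) -> list[str]:
    """Return the lines in `generated` that look like elision placeholders."""
    return [match.group(0).strip() for match in ELISION_PATTERN.finditer(generated)]


snippet = """def area(r):
    return 3.14159 * r * r

# ... (rest of file unchanged)
"""

# A non-empty result means the output is a partial file and cannot
# safely overwrite the original.
print(find_elisions(snippet))
```

An agent that finds such a placeholder cannot simply save the output as the new file; it needs a separate step that merges the partial edit into the original.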

Accordingly, Apply-Edit models are coding models specialized for, well, applying edits. They are far faster and cheaper than code generation models and are finetuned to ensure they output complete files, not just code snippets. An Apply-Edit model can:

- Pinpoint specific changes: Identify exactly which lines need modification
- Preserve context: Maintain the existing code structure and style
- Apply minimal edits: Make only the necessary changes, reducing the risk of introducing bugs
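One way to check these properties on a merged file is to diff it against the original and confirm that only the intended lines changed. The sketch below uses Python's standard `difflib`; the `changed_lines` helper and the sample files are hypothetical, and this is not how Mercury Coder itself is evaluated.

```python
import difflib


def changed_lines(original: str, merged: str) -> list[str]:
    """Return the removed ("- ") and added ("+ ") lines between two file versions."""
    diff = difflib.ndiff(original.splitlines(), merged.splitlines())
    # ndiff also emits "  " (unchanged) and "? " (hint) lines; keep only real changes.
    return [line for line in diff if line.startswith(("- ", "+ "))]


original = """def greet(name):
    print('Hello ' + name)

def farewell(name):
    print('Bye ' + name)
"""

# Hypothetical output of an apply step: only the body of greet() differs.
merged = """def greet(name):
    print(f'Hello, {name}!')

def farewell(name):
    print('Bye ' + name)
"""

for line in changed_lines(original, merged):
    print(line)
```

A well-applied edit shows up here as a short list of removals and additions, with every untouched function absent from the diff.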

Mercury Coder’s Apply-Edit Capabilities

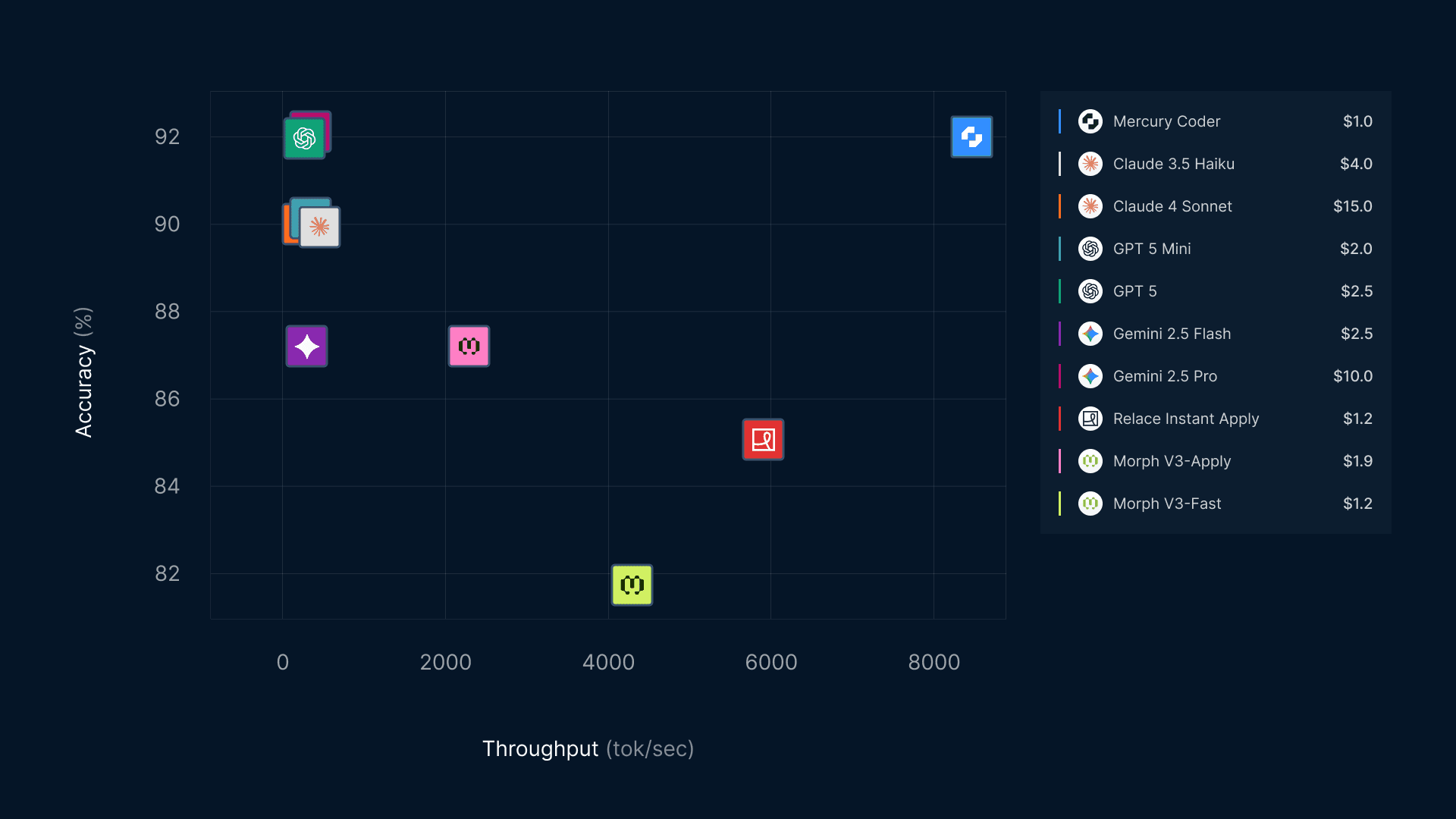

Mercury Coder outperforms both frontier LLMs and specialized Apply-Edit models. On a standardized dataset developed by Kortix AI, Mercury Coder accurately applies edits 92% of the time, matching the quality of frontier LLMs such as GPT-5, while running 46x as fast! And it runs faster than the latest Apply-Edit models from Relace and Morph while providing significantly higher quality. Overall, Mercury Coder strictly dominates other Apply-Edit models on speed, quality, and cost.

Using Apply-Edit

You can use Mercury Coder’s Apply-Edit functionality immediately through the Continue extension for VS Code. Just click Apply after generating code to apply the changes.

To access Apply-Edit programmatically, update your Mercury Coder endpoint to `/v1/apply/completions`.

Mercury Coder expects apply-edit requests to contain two sections: the original code and an update snippet. The model will intelligently merge the update snippet into the original code while preserving the code's structure, order, comments, and indentation.

The original code should be formatted as:

This should contain the current version of the code that needs to be updated. Replace {original_code} with your actual code content.

The update snippet should be formatted as:

This contains the changes or new code that should be merged into the original code. Replace [UPDATED CODE SNIPPET] with your actual update content. The Apply-Edit model will intelligently merge these changes while preserving the code's structure, order, comments, and indentation.

A complete request looks like this:
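As a rough sketch only, the Python below assembles such a request with the standard library. Apart from the `/v1/apply/completions` path, everything here is an assumption to verify against the API documentation: the base URL, the `mercury-coder` model name, the OpenAI-style `messages` payload, the `INCEPTION_API_KEY` variable, and the exact delimiter tags wrapping the two sections.

```python
import json
import os
import urllib.request

API_BASE = "https://api.inceptionlabs.ai"  # assumed base URL
APPLY_PATH = "/v1/apply/completions"       # endpoint path named in this post
MODEL = "mercury-coder"                    # assumed model identifier


def build_apply_prompt(original_code: str, update_snippet: str) -> str:
    """Join the two sections; the delimiter tags are assumed, not confirmed."""
    return (
        f"<|original_code|>\n{original_code}\n<|/original_code|>\n\n"
        f"<|update_snippet|>\n{update_snippet}\n<|/update_snippet|>"
    )


def build_apply_request(original_code: str, update_snippet: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request; the payload shape is an assumption."""
    payload = {
        "model": MODEL,
        "messages": [
            {"role": "user", "content": build_apply_prompt(original_code, update_snippet)}
        ],
    }
    return urllib.request.Request(
        API_BASE + APPLY_PATH,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_apply_request(
    original_code="def add(a, b):\n    return a + b",
    update_snippet="def add(a: int, b: int) -> int:\n    return a + b",
    api_key=os.environ.get("INCEPTION_API_KEY", "YOUR_API_KEY"),
)
print(request.get_method(), request.full_url)
```

Passing `request` to `urllib.request.urlopen` would send it; the response format is not covered here.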

If you are an enterprise customer interested in Inception's dLLM technology, please reach out to us at sales@inceptionlabs.ai. And please share any feedback on our Discord.
